Install Metrics Server on the controller node

See https://github.com/kubernetes-sigs/metrics-server and its releases page, https://github.com/kubernetes-sigs/metrics-server/releases, to download a version compatible with your cluster's Kubernetes version.

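Obtain the metrics-server YAML manifest and save it as metric.yaml. The copy below uses image v0.8.0, runs two replicas, and passes --kubelet-insecure-tls, which skips verification of the kubelets' serving certificates. That flag is convenient on a lab cluster whose kubelets use self-signed certificates but is not recommended for production.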

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

---

apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - appProtocol: https
    name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server

---

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  replicas: 2
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=10250
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
        image: registry.k8s.io/metrics-server/metrics-server:v0.8.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 10250
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop:
            - ALL
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
          seccompProfile:
            type: RuntimeDefault
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir

---

apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
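Before applying the manifest, it can help to confirm that every object made it into metric.yaml intact. The Python sketch below is a hypothetical helper (`count_kinds` is not part of kubectl or any library). It uses only the standard library, so instead of fully parsing YAML it counts the top-level `kind:` line of each `---`-separated document and ignores indented fields such as `roleRef.kind`.

```python
from collections import Counter


def count_kinds(manifest: str) -> Counter:
    """Hypothetical helper: count the top-level `kind:` of each document
    in a multi-document YAML manifest.

    Only lines starting at column 0 are considered, and only the first such
    line per document is counted, so indented fields like roleRef.kind and
    subjects[].kind are ignored.
    """
    kinds: Counter = Counter()
    for document in manifest.split("\n---"):
        for line in document.splitlines():
            if line.startswith("kind:"):
                kinds[line.split(":", 1)[1].strip()] += 1
                break  # one top-level kind per document
    return kinds
```

For example, `count_kinds(open("metric.yaml").read())` on the manifest above should report two ClusterRole and two ClusterRoleBinding objects plus one each of ServiceAccount, RoleBinding, Service, Deployment and APIService. A real YAML parser, or `kubectl apply --dry-run=client -f metric.yaml`, is the more robust check.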

Apply the manifest on the controller node:

kubectl apply -f metric.yaml

Verify that the metrics-server pods are running:

kubectl get pods -n kube-system

NAME                                      READY   STATUS    RESTARTS      AGE
calico-kube-controllers-79949b87d-9qddx   1/1     Running   2 (24h ago)   5d23h
calico-node-2l24s                         1/1     Running   2 (24h ago)   5d23h
calico-node-f5jxk                         1/1     Running   3 (24h ago)   5d23h
calico-node-f9ztb                         1/1     Running   1 (24h ago)   5d23h
calico-node-gpxxw                         1/1     Running   1 (24h ago)   5d23h
coredns-674b8bbfcf-5zxmv                  1/1     Running   1 (24h ago)   5d23h
coredns-674b8bbfcf-7f5wg                  1/1     Running   1 (24h ago)   5d23h
etcd-kubnew-master01                      1/1     Running   2 (24h ago)   5d23h
etcd-kubnew-master02                      1/1     Running   1 (24h ago)   5d23h
kube-apiserver-kubnew-master01            1/1     Running   2 (24h ago)   5d23h
kube-apiserver-kubnew-master02            1/1     Running   2 (24h ago)   5d23h
kube-controller-manager-kubnew-master01   1/1     Running   2 (24h ago)   5d23h
kube-controller-manager-kubnew-master02   1/1     Running   1 (24h ago)   5d23h
kube-proxy-9xvl5                          1/1     Running   1 (24h ago)   5d23h
kube-proxy-lfm8f                          1/1     Running   2 (24h ago)   5d23h
kube-proxy-mpvsl                          1/1     Running   3 (24h ago)   5d23h
kube-proxy-vpknq                          1/1     Running   1 (24h ago)   5d23h
kube-scheduler-kubnew-master01            1/1     Running   2 (24h ago)   5d23h
kube-scheduler-kubnew-master02            1/1     Running   1 (24h ago)   5d23h
metrics-server-56fb9549f4-8vqdf           1/1     Running   0             44m
metrics-server-56fb9549f4-m9rsn           1/1     Running   0             44m
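The same check can be scripted. The sketch below assumes the default column layout shown above and reports whether every pod whose name starts with a given prefix is Running with all of its containers ready. `parse_pods` and `all_ready` are hypothetical helper names. Because RESTARTS can contain spaces (for example `2 (24h ago)`), each row is split from both ends rather than on fixed columns.

```python
def parse_pods(output: str) -> list[dict]:
    """Hypothetical helper: parse the default `kubectl get pods` table.

    NAME, READY and STATUS are taken from the left, AGE from the right,
    and whatever lies in between is kept as RESTARTS.
    """
    rows = [line for line in output.strip().splitlines() if line.strip()]
    pods = []
    for line in rows[1:]:  # skip the header row
        parts = line.split()
        pods.append({
            "name": parts[0],
            "ready": parts[1],
            "status": parts[2],
            "restarts": " ".join(parts[3:-1]),
            "age": parts[-1],
        })
    return pods


def all_ready(pods: list[dict], prefix: str) -> bool:
    """True if at least one pod matches `prefix` and every match is
    Running with READY showing all containers ready (e.g. 1/1)."""
    matching = [p for p in pods if p["name"].startswith(prefix)]

    def fully_ready(pod: dict) -> bool:
        ready, total = pod["ready"].split("/")
        return pod["status"] == "Running" and ready == total

    return bool(matching) and all(fully_ready(p) for p in matching)
```

In practice the text would come from `subprocess.run(["kubectl", "get", "pods", "-n", "kube-system"], capture_output=True, text=True).stdout`; `kubectl get pods -o json` is a sturdier source if you need more than a quick check.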

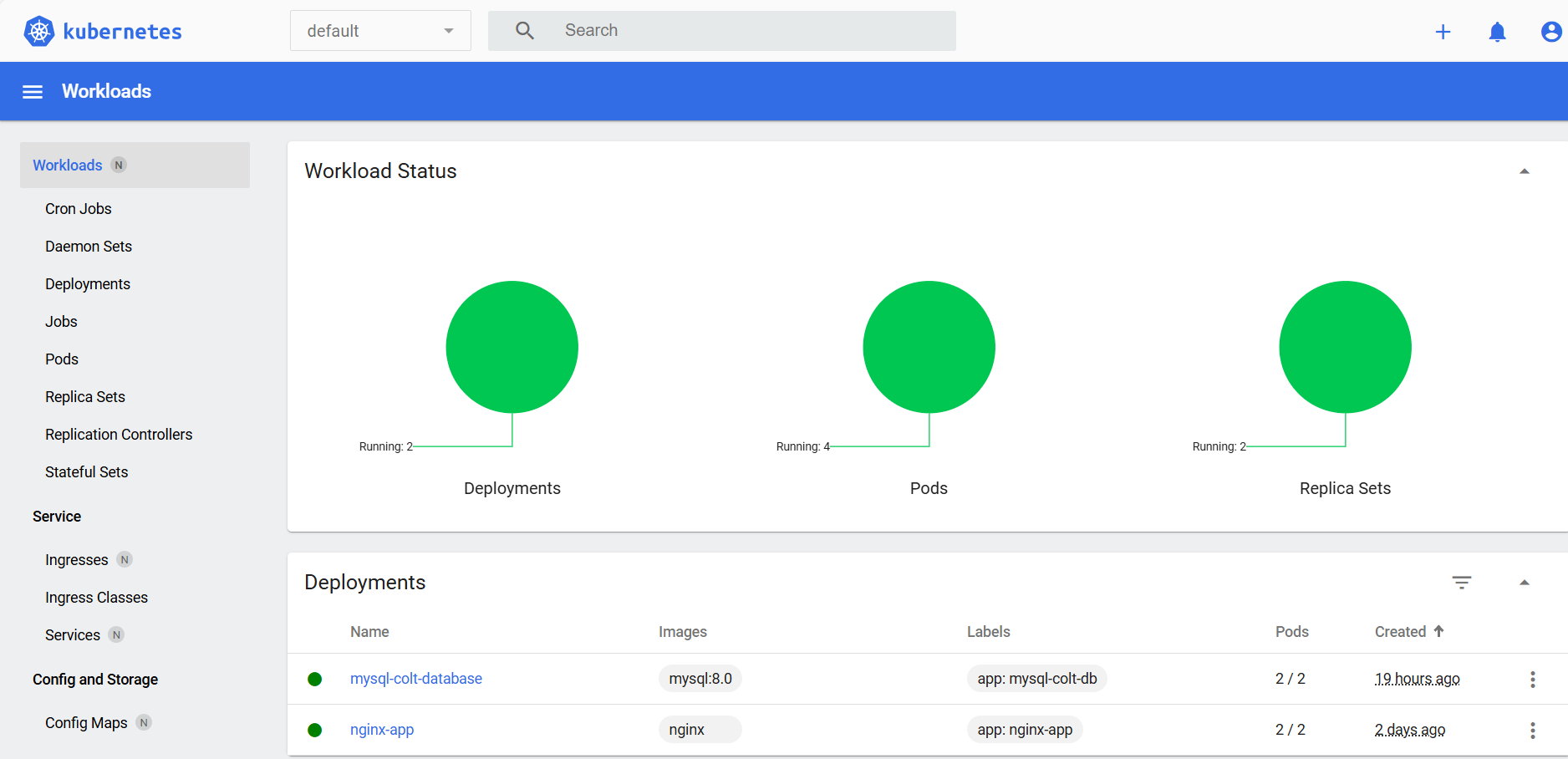

Note the pod replica count before scaling:

kubectl get pods

NAME                                  READY   STATUS    RESTARTS        AGE
mysql-colt-database-5bc5d55fc-gcxpg   1/1     Running   0               6s
mysql-colt-database-5bc5d55fc-lgvth   1/1     Running   0               6s
nginx-test-b548755db-jskkl            1/1     Running   2 (6m44s ago)   4d21h
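Pods created by a Deployment are named `<deployment>-<pod-template-hash>-<random suffix>`, so the replica count per Deployment can be read straight off this listing. A minimal sketch, with a hypothetical helper name, that strips the last two dash-separated segments of each pod name:

```python
from collections import Counter


def replicas_by_deployment(pod_names: list[str]) -> Counter:
    """Hypothetical helper: count pods per Deployment, assuming the default
    naming scheme <deployment>-<pod-template-hash>-<random suffix>."""
    counts: Counter = Counter()
    for name in pod_names:
        deployment = name.rsplit("-", 2)[0]  # drop the hash and the suffix
        counts[deployment] += 1
    return counts
```

Applied to the listing above it gives 2 for mysql-colt-database and 1 for nginx-test. `kubectl get deployment mysql-colt-database` shows the same figure directly in its READY column.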

Apply horizontal pod autoscaling to the MySQL database Deployment

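Create the following YAML (saved here as scaling.yaml), specifying the minimum and maximum replica counts and the CPU and memory utilization targets. Utilization targets are percentages of each container's resource request, so the mysql-colt-database Deployment must declare CPU and memory requests for these metrics to be computed.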

apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: mysql-colt-scaler
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment # or StatefulSet, ReplicaSet
    name: mysql-colt-database
  minReplicas: 3
  maxReplicas: 10
  metrics:
  - type: Resource # or Pods, Object, External
    resource:
      name: cpu # cpu or memory
      target:
        type: Utilization
        averageUtilization: 60
  - type: Resource # or Pods, Object, External
    resource:
      name: memory # cpu or memory
      target:
        type: Utilization
        averageUtilization: 30
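The Kubernetes documentation describes the core rule the HPA uses to turn these targets into a replica count. For each metric, it computes desiredReplicas = ceil(currentReplicas * currentUtilization / targetUtilization). When there are several metrics, it keeps the largest proposal and clamps it to the minReplicas..maxReplicas range. The Python sketch below models only that rule plus the default 10% tolerance. It is a simplification: the real controller also applies scaling behavior policies, stabilization windows and special handling for pods that are not yet ready. `desired_replicas` is a hypothetical name.

```python
import math


def desired_replicas(current: int,
                     metrics: dict[str, tuple[float, float]],
                     min_replicas: int,
                     max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Hypothetical, simplified HPA recommendation for Utilization targets.

    `metrics` maps a metric name to (current utilization %, target %).
    Each metric proposes ceil(current * usage / target), or keeps the
    current count if the ratio is within `tolerance` of 1.0. The largest
    proposal wins and is clamped to [min_replicas, max_replicas].
    """
    proposals = []
    for usage, target in metrics.values():
        ratio = usage / target
        if abs(ratio - 1.0) <= tolerance:
            proposals.append(current)  # close enough: no change
        else:
            proposals.append(math.ceil(current * ratio))
    return max(min_replicas, min(max_replicas, max(proposals)))
```

With the readings reported later in this walkthrough (10 replicas, CPU at 0% of a 60% target, memory at 74% of a 30% target), CPU proposes 0 replicas and memory proposes ceil(10 * 74 / 30) = 25. The result is therefore capped at maxReplicas = 10.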

Apply it on the controller node:

kubectl apply -f scaling.yaml

Verify the new HorizontalPodAutoscaler, “mysql-colt-scaler”:

kubectl get horizontalpodautoscalers.autoscaling mysql-colt-scaler

NAME                REFERENCE                        TARGETS                          MINPODS   MAXPODS   REPLICAS   AGE
mysql-colt-scaler   Deployment/mysql-colt-database   cpu: 0%/60%, memory: 74%/30%   3         10        10         21m
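When checking many autoscalers, the TARGETS column can be parsed to flag which metrics are above target. A small sketch, assuming the `name: current%/target%` format printed by this kubectl version (other kubectl versions may print the column differently). The function names are hypothetical, and metrics shown as `<unknown>` are simply skipped because they do not match the pattern.

```python
import re

# Matches entries such as "memory: 74%/30%" in the TARGETS column.
_TARGET = re.compile(r"(\w+): (\d+)%/(\d+)%")


def parse_targets(cell: str) -> dict[str, tuple[int, int]]:
    """Hypothetical helper: turn 'cpu: 0%/60%, memory: 74%/30%' into
    {'cpu': (0, 60), 'memory': (74, 30)}."""
    return {name: (int(cur), int(tgt)) for name, cur, tgt in _TARGET.findall(cell)}


def over_target(cell: str) -> list[str]:
    """Names of metrics whose current utilization exceeds the target."""
    return sorted(name for name, (cur, tgt) in parse_targets(cell).items() if cur > tgt)
```

Here `over_target("cpu: 0%/60%, memory: 74%/30%")` returns `['memory']`: memory, not CPU, is what drives this autoscaler.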

Check the scaling events:

kubectl get horizontalpodautoscalers mysql-colt-scaler

NAME                REFERENCE                        TARGETS                          MINPODS   MAXPODS   REPLICAS   AGE
mysql-colt-scaler   Deployment/mysql-colt-database   cpu: 0%/60%, memory: 74%/30%   3         10        10         30m


kubectl get horizontalpodautoscalers mysql-colt-scaler -o wide

NAME                REFERENCE                        TARGETS                          MINPODS   MAXPODS   REPLICAS   AGE
mysql-colt-scaler   Deployment/mysql-colt-database   cpu: 0%/60%, memory: 74%/30%   3         10        10         30m

kubectl describe horizontalpodautoscalers mysql-colt-scaler

Name:               mysql-colt-scaler
Namespace:          default
Labels:             <none>
Annotations:        <none>
CreationTimestamp:  Tue, 18 Nov 2025 10:26:42 +0530
Reference:          Deployment/mysql-colt-database
Metrics:            ( current / target )
  resource cpu on pods (as a percentage of request):     0% (5m) / 60%
  resource memory on pods (as a percentage of request):  74% (389166284800m) / 30%
Min replicas:       3
Max replicas:       10
Deployment pods:    10 current / 10 desired
Conditions:
  Type            Status  Reason            Message
  ----            ------  ------            -------
  AbleToScale     True    ReadyForNewScale  recommended size matches current size
  ScalingActive   True    ValidMetricFound  the HPA was able to successfully calculate a replica count from memory resource utilization (percentage of request)
  ScalingLimited  True    TooManyReplicas   the desired replica count is more than the maximum replica count
Events:
  Type    Reason             Age   From                       Message
  ----    ------             ----  ----                       -------
  Normal  SuccessfulRescale  30m   horizontal-pod-autoscaler  New size: 3; reason: Current number of replicas below Spec.MinReplicas
  Normal  SuccessfulRescale  30m   horizontal-pod-autoscaler  New size: 5; reason: memory resource utilization (percentage of request) above target
  Normal  SuccessfulRescale  30m   horizontal-pod-autoscaler  New size: 7; reason: memory resource utilization (percentage of request) above target
  Normal  SuccessfulRescale  30m   horizontal-pod-autoscaler  New size: 10; reason: memory resource utilization (percentage of request) above target
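The value in parentheses next to each metric is a Kubernetes resource quantity, and the `m` suffix means milli-units. So `5m` of CPU is 0.005 cores, and `389166284800m` of memory is 389,166,284.8 bytes. The sketch below converts such quantities using the standard decimal (k, M, G, ...) and binary (Ki, Mi, Gi, ...) suffixes. It is a simplified parser (no exponent notation such as `1e3`), and `parse_quantity` is a hypothetical name, not a library function.

```python
from decimal import Decimal

# Decimal (SI) and binary suffixes used by Kubernetes resource quantities.
_SUFFIXES = {
    "m": Decimal("0.001"),
    "k": Decimal(10) ** 3, "M": Decimal(10) ** 6, "G": Decimal(10) ** 9,
    "T": Decimal(10) ** 12, "P": Decimal(10) ** 15, "E": Decimal(10) ** 18,
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20, "Gi": Decimal(2) ** 30,
    "Ti": Decimal(2) ** 40, "Pi": Decimal(2) ** 50, "Ei": Decimal(2) ** 60,
}


def parse_quantity(quantity: str) -> Decimal:
    """Hypothetical helper: convert '389166284800m', '200Mi' or '100m'
    to base units (bytes for memory, cores for CPU)."""
    # Try two-letter suffixes first so "Mi" is not mistaken for "M".
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if quantity.endswith(suffix):
            return Decimal(quantity[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(quantity)  # plain number, no suffix
```

For the memory reading above, `parse_quantity("389166284800m") / 2**20` is about 371.14, i.e. roughly 371 MiB of memory per pod on average as reported by the HPA.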

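Because memory utilization (74%) stays above its 30% target, the HPA wants more replicas than maxReplicas allows and holds the Deployment at 10 pods (condition ScalingLimited, reason TooManyReplicas). Check the newly created pods: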

kubectl get pods

NAME                                  READY   STATUS    RESTARTS        AGE
mysql-colt-database-5bc5d55fc-685lv   1/1     Running   0               31m
mysql-colt-database-5bc5d55fc-9dsk8   1/1     Running   1 (4m29s ago)   31m
mysql-colt-database-5bc5d55fc-bsjm7   1/1     Running   1 (4m34s ago)   31m
mysql-colt-database-5bc5d55fc-c5hzf   1/1     Running   0               31m
mysql-colt-database-5bc5d55fc-ft5hb   1/1     Running   0               31m
mysql-colt-database-5bc5d55fc-glh4r   1/1     Running   1 (4m34s ago)   33m
mysql-colt-database-5bc5d55fc-kz694   1/1     Running   0               31m
mysql-colt-database-5bc5d55fc-p7brg   1/1     Running   1 (4m29s ago)   31m
mysql-colt-database-5bc5d55fc-qntnb   1/1     Running   1 (4m29s ago)   33m
mysql-colt-database-5bc5d55fc-zc2v8   1/1     Running   1 (4m34s ago)   32m
nginx-test-b548755db-jskkl            1/1     Running   2 (4m29s ago)   4d21h
