Basic cluster build and configuration guide

This guide covers building a Kubernetes cluster with:

- One master (control-plane) node

- Two worker nodes

Tested on the following OS and specs:

- Debian 13

- 8 GB RAM, 20 GB disk, 4 vCPUs

Create three VMs and assign each a fixed IP address.

For example:

- kub-master – 192.168.1.35

- kub-worker01 – 192.168.1.36

- kub-worker02 – 192.168.1.37

Set the hostname on each VM by running the matching command on that node:

sudo hostnamectl set-hostname "kub-master" # control node

sudo hostnamectl set-hostname "kub-worker01" # 1st worker node

sudo hostnamectl set-hostname "kub-worker02" # 2nd worker node

Add the following entries to the /etc/hosts file on each VM:

192.168.1.35 kub-master

192.168.1.36 kub-worker01

192.168.1.37 kub-worker02
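Appending these lines by hand (or with a script) on every run can leave duplicates in /etc/hosts. A minimal sketch of an idempotent alternative follows; the function name add_host_entry is hypothetical, and it assumes the usual "IP name [aliases...]" layout of /etc/hosts.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless a line for the
# same IP already lists NAME. FILE defaults to /etc/hosts (writing it needs root).
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 when some line starts with IP and has NAME as a later field
  if awk -v ip="$ip" -v n="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }
    ' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, from a root shell on each VM: add_host_entry 192.168.1.35 kub-master, and the same for the two workers. Running it twice still leaves a single line per host.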

Update packages on each VM

sudo apt update && sudo apt upgrade -y

Disable swap on each VM (by default, the kubelet refuses to start while swap is enabled)

sudo swapoff -a

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
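The sed command above only matches swap entries whose fields are separated by single spaces (the / swap / pattern), so a tab-separated /etc/fstab line would stay active. A hedged sketch of a check that looks at the third fstab field instead, plus a runtime check against /proc/swaps; both function names are hypothetical.

```shell
# Hypothetical helper: succeed (exit 0) if the fstab still has an uncommented
# entry whose filesystem type (3rd field, spaces or tabs) is "swap".
fstab_has_active_swap() {
  local file="${1:-/etc/fstab}"
  awk '$1 !~ /^#/ && $3 == "swap" { found = 1 } END { exit !found }' "$file"
}

# Hypothetical helper: succeed if no swap is in use right now, i.e.
# /proc/swaps contains only its header line.
swap_is_off() {
  [ "$(wc -l < /proc/swaps)" -le 1 ]
}
```

If fstab_has_active_swap succeeds after the sed step, comment out the remaining entry by hand; swap_is_off should succeed right after swapoff -a.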

Install Containerd on All Nodes

cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf
overlay
br_netfilter
EOF

sudo modprobe overlay

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF

sudo sysctl --system
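sudo sysctl --system prints every value it applies, so a failed setting is easy to miss. A minimal check reads the three settings back from /proc/sys. The function name is hypothetical, and its optional argument exists only so it can be pointed at a fake directory tree for testing.

```shell
# Hypothetical helper: print each required sysctl that is not set to 1 and
# fail if any is missing or 0. ROOT (optional) prefixes /proc/sys.
check_k8s_sysctls() {
  local root="${1:-}" key path val status=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    # e.g. net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
    path="$root/proc/sys/$(printf '%s' "$key" | tr . /)"
    val="$(cat "$path" 2>/dev/null || echo missing)"
    if [ "$val" != "1" ]; then
      echo "$key=$val"
      status=1
    fi
  done
  return "$status"
}
```

Run check_k8s_sysctls on each node; no output means all three are set. A "missing" value for the bridge keys usually means the br_netfilter module is not loaded.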

sudo apt update

sudo apt -y install containerd

sudo mkdir -p /etc/containerd

containerd config default | sudo tee /etc/containerd/config.toml >/dev/null

sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml

sudo systemctl restart containerd

sudo systemctl enable containerd
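The sed edit silently does nothing if the generated config has no "SystemdCgroup = false" line (for example, if a different containerd version lays out its default config differently). A small check, with a hypothetical function name:

```shell
# Hypothetical helper: succeed only if the containerd config enables the
# systemd cgroup driver ("SystemdCgroup = true" at any indentation).
containerd_uses_systemd_cgroup() {
  local file="${1:-/etc/containerd/config.toml}"
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true([[:space:]]|$)' "$file"
}
```

For example: containerd_uses_systemd_cgroup && echo ok || echo "SystemdCgroup not enabled".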

Add Kubernetes Package Repository

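Adjust the version in both URLs as needed; this guide uses v1.33. First install the tools needed to fetch and store the signing key:

sudo apt install -y ca-certificates curl gpg

sudo mkdir -p /etc/apt/keyrings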

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.33/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.33/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
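Because the minor version appears in two URLs, it is easy to update one and miss the other. A sketch that derives both from a single value; the function names and the K8S_KEYRING variable are hypothetical, and the URL layout is the one used in the commands above.

```shell
# Hypothetical variable: keyring path used in the apt source line above.
K8S_KEYRING=/etc/apt/keyrings/kubernetes-apt-keyring.gpg

# Print the apt source line for a Kubernetes minor version such as "1.33".
k8s_repo_line() {
  printf 'deb [signed-by=%s] https://pkgs.k8s.io/core:/stable:/v%s/deb/ /\n' "$K8S_KEYRING" "$1"
}

# Print the URL of the repository signing key for the same minor version.
k8s_key_url() {
  printf 'https://pkgs.k8s.io/core:/stable:/v%s/deb/Release.key\n' "$1"
}
```

For example: k8s_repo_line 1.33 | sudo tee /etc/apt/sources.list.d/kubernetes.list, then curl -fsSL "$(k8s_key_url 1.33)" | sudo gpg --dearmor -o "$K8S_KEYRING".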

Install Kubernetes on all nodes

sudo apt update

sudo apt install kubelet kubeadm kubectl -y

sudo apt-mark hold kubelet kubeadm kubectl
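apt-mark hold keeps apt upgrade from moving these packages to a new version outside a planned kubeadm upgrade. To confirm that all three are held, here is a sketch (hypothetical function) that reads the output of apt-mark showhold:

```shell
# Hypothetical helper: read package names on stdin (one per line, as printed
# by `apt-mark showhold`) and fail if kubelet, kubeadm or kubectl is missing.
k8s_packages_held() {
  local held pkg
  held="$(cat)"
  for pkg in kubelet kubeadm kubectl; do
    if ! printf '%s\n' "$held" | grep -qx "$pkg"; then
      echo "not held: $pkg" >&2
      return 1
    fi
  done
  return 0
}
```

For example: apt-mark showhold | k8s_packages_held && echo "all held".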

Set Up the Kubernetes Cluster with Kubeadm on the Controller Node

Log in as a non-root user with sudo rights (for example, “kubuser”) and run the following.

Also adjust the version accordingly.

sudo kubeadm init --pod-network-cidr=10.244.0.0/16
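Size the pod network CIDR with the per-node split in mind. kubeadm passes it to kube-controller-manager, which by default assigns each node a /24 from it (the IPv4 default of --node-cidr-mask-size). A /24 pod CIDR therefore has room for only one node's range, while a /16 has room for 256. The arithmetic, as a small sketch (function name hypothetical):

```shell
# Hypothetical helper: number of per-node pod ranges that fit in a cluster pod
# CIDR, i.e. 2^(node_mask - cluster_prefix). node_mask defaults to 24 (the
# IPv4 default assumed above). Prints 0 and fails if node_mask < cluster_prefix.
node_range_count() {
  local cluster_prefix="$1" node_mask="${2:-24}"
  if [ "$node_mask" -lt "$cluster_prefix" ]; then
    echo 0
    return 1
  fi
  echo $(( 1 << (node_mask - cluster_prefix) ))
}
```

For example, node_range_count 24 prints 1 and node_range_count 16 prints 256.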

On the controller node, as the same non-root user (for example, “kubuser”), set up the kubectl config:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Verify the cluster (the controller node reports NotReady until a network plugin is installed)

kubectl get nodes

kubectl cluster-info

Join the worker nodes to the controller node by running the join command printed at the end of kubeadm init on each worker:

sudo kubeadm join kub-master:6443 --token <token> --discovery-token-ca-cert-hash <hash>

If the token has expired (join tokens are valid for 24 hours by default), generate a new join command on the controller node:

sudo kubeadm token create --print-join-command
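If you already have a valid token (for example, from kubeadm token list) but not the CA certificate hash, you can recompute the hash from the cluster CA on the controller node. The pipeline below follows the openssl recipe in the kubeadm join documentation and assumes the default RSA CA key; the function name is hypothetical.

```shell
# Hypothetical helper: print the SHA-256 hash of the CA certificate's public
# key (DER-encoded SubjectPublicKeyInfo), the value kubeadm join expects
# after "sha256:". Assumes an RSA CA key, kubeadm's default.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Pass it as --discovery-token-ca-cert-hash sha256:$(ca_cert_hash).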

Install the Calico network plugin. Apply the manifest once from the controller node; Calico then runs on all three nodes.

Calico v3.29.3 has been tested with Kubernetes 1.33 in this setup.

kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.29.3/manifests/calico.yaml

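On all three nodes, create the following symlink. On Debian, containerd may look for CNI plugins in /usr/lib/cni, while Calico installs them into /opt/cni/bin: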

sudo ln -s /opt/cni/bin /usr/lib/cni
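ln -s behaves differently if /usr/lib/cni already exists. If it is a directory, or a symlink to one, the new link is created inside it, so re-running the command leaves a stray /opt/cni/bin/bin link. A guarded version (function name hypothetical):

```shell
# Hypothetical helper: create DST as a symlink to SRC only when DST does not
# exist yet; otherwise report it and leave it unchanged.
link_cni_dir() {
  local src="${1:-/opt/cni/bin}" dst="${2:-/usr/lib/cni}"
  if [ -e "$dst" ] || [ -L "$dst" ]; then
    echo "$dst already exists, not touching it" >&2
    return 0
  fi
  ln -s "$src" "$dst"
}
```

Run it as root on every node, then check the result with ls -l /usr/lib/cni.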

Verify the Calico pod status:

kubectl get pods -n kube-system

Wait until all pods are in the “Running” state.

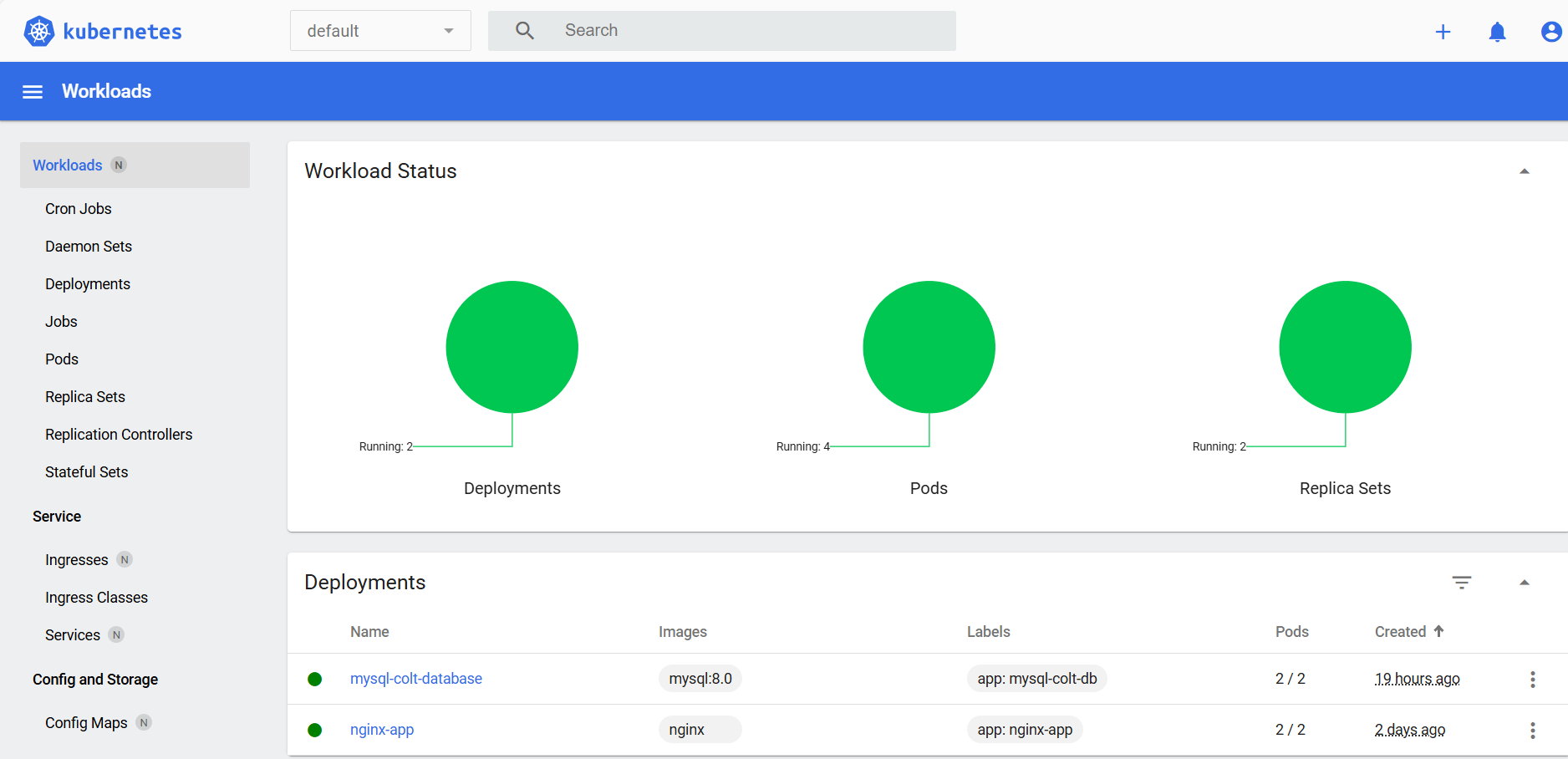
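Instead of re-running the command, you can use kubectl wait --for=condition=Ready pods --all -n kube-system --timeout=300s, which blocks until the pods report Ready. For a one-shot check, here is a hypothetical filter over the table output. It lists every pod that is not yet Running with all containers ready:

```shell
# Hypothetical helper: read `kubectl get pods --no-headers` output on stdin and
# print each pod whose STATUS (3rd column) is not Running or whose READY column
# (2nd, e.g. "0/1") shows fewer ready containers than total. Fails if any found.
pods_not_ready() {
  awk '{
    split($2, r, "/")
    if ($3 != "Running" || r[1] != r[2]) { print $1; bad = 1 }
  } END { exit (bad ? 1 : 0) }'
}
```

For example: kubectl get pods -n kube-system --no-headers | pods_not_ready && echo "all pods ready".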

Test the Kubernetes Cluster

Deploy directly from a public registry image (for example, nginx)

kubectl create deployment nginx-app --image=nginx --replicas 2

kubectl expose deployment nginx-app --name=nginx-web-svc --type NodePort --port 80 --target-port 80

kubectl describe svc nginx-web-svc
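kubectl describe shows the assigned NodePort among other details, while kubectl get svc nginx-web-svc -o jsonpath='{.spec.ports[0].nodePort}' prints just the port. Here is a hypothetical helper that turns that port into one URL per node, for testing with a browser or curl:

```shell
# Hypothetical helper: print one URL per node IP for a NodePort service.
# Usage: nodeport_urls SCHEME PORT IP [IP...]
nodeport_urls() {
  local scheme="$1" port="$2" ip
  shift 2
  for ip in "$@"; do
    printf '%s://%s:%s\n' "$scheme" "$ip" "$port"
  done
}
```

For example: nodeport_urls http "$(kubectl get svc nginx-web-svc -o jsonpath='{.spec.ports[0].nodePort}')" 192.168.1.35 192.168.1.36 192.168.1.37. Any printed URL should return the nginx welcome page.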

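Deploy from a manifest file (for example, MySQL)

Prepare the manifest as follows. Note that Calico reads the cni.projectcalico.org/ipAddrs annotation from Pods. On the Deployment's own metadata (as below) it therefore has no effect, and moving it into the Pod template would make both replicas request the same IP.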

vi mysql.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-colt-database
  labels:
    app: mysql-colt-db
  annotations:
    cni.projectcalico.org/ipAddrs: "[\"10.244.100.51\"]"
spec:
  replicas: 2
  selector:
    matchLabels:
      app: mysql-colt-db
  template:
    metadata:
      labels:
        app: mysql-colt-db
    spec:
      containers:
        - name: mysql
          image: mysql:8.0
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "testroot123"
            - name: MYSQL_DATABASE
              value: "mydb"
            - name: MYSQL_USER
              value: "testuser"
            - name: MYSQL_PASSWORD
              value: "test123"
          ports:
            - containerPort: 3306
          resources:
            requests:
              cpu: 1000m
              memory: 500Mi
            limits:
              cpu: 2000m
              memory: 700Mi
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-svc
spec:
  selector:
    app: mysql-colt-db
  ports:
    - protocol: TCP
      port: 3306
      targetPort: 3306
  type: ClusterIP

Apply deployment

kubectl apply -f mysql.yaml

Install Kubernetes Dashboard

Install the Helm package manager

Add the Helm GPG key

curl https://baltocdn.com/helm/signing.asc | gpg --dearmor | sudo tee /usr/share/keyrings/helm.gpg > /dev/null

Add the Helm repository

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/helm.gpg] https://baltocdn.com/helm/stable/debian/ all main" | sudo tee /etc/apt/sources.list.d/helm-stable-debian.list

Update package index and install Helm

sudo apt-get update

sudo apt-get install helm

Verify the installation:

helm version

Install Kubernetes Dashboard using Helm

Add kubernetes-dashboard repository

helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/

Deploy a Helm Release named “kubernetes-dashboard” using the kubernetes-dashboard chart

helm upgrade --install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard --create-namespace --namespace kubernetes-dashboard

Access the Kubernetes Dashboard

Create an admin user account

Create the admin-user manifest as follows:

vi admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: "admin-user"
type: kubernetes.io/service-account-token

Apply the manifest:

kubectl apply -f admin.yaml

Patch the kubernetes-dashboard-kong-proxy service to change its type to NodePort:

kubectl patch svc kubernetes-dashboard-kong-proxy -n kubernetes-dashboard -p '{"spec": {"type": "NodePort"}}'

List the dashboard services and note the NodePort of kubernetes-dashboard-kong-proxy. The Dashboard is reachable on that port at the controller or any worker IP:

kubectl get svc -n kubernetes-dashboard

NAME                                   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
kubernetes-dashboard-api               ClusterIP   10.96.137.36     <none>        8000/TCP         2d20h
kubernetes-dashboard-auth              ClusterIP   10.107.151.246   <none>        8000/TCP         2d20h
kubernetes-dashboard-kong-proxy        NodePort    10.103.15.133    <none>        443:31011/TCP    2d20h
kubernetes-dashboard-metrics-scraper   ClusterIP   10.97.159.202    <none>        8000/TCP         2d20h
kubernetes-dashboard-web               NodePort    10.106.32.217    <none>        8000:31330/TCP   2d20h

In this example, the NodePort of kubernetes-dashboard-kong-proxy is 31011.

In a browser, open https://192.168.1.35:31011, https://192.168.1.36:31011, or https://192.168.1.37:31011 (the browser will likely warn about a self-signed certificate).

Retrieve the admin-user bearer token to log in to the UI:

kubectl get secret admin-user -n kubernetes-dashboard -o jsonpath="{.data.token}" | base64 -d

Paste the bearer token into the Dashboard login page to sign in.
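The token is a JWT. If login fails, it can help to confirm which service account the token was issued for. Here is a sketch (hypothetical function) that decodes only the payload segment and does not verify the signature. For this setup, the "sub" claim would be expected to read system:serviceaccount:kubernetes-dashboard:admin-user.

```shell
# Hypothetical helper: print the decoded JSON payload (the 2nd dot-separated,
# base64url-encoded segment) of a JWT. No signature verification is done.
jwt_payload() {
  local seg
  # base64url -> base64 alphabet: '-' becomes '+', '_' becomes '/'
  seg="$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')"
  # base64url drops "=" padding; restore it so base64 -d accepts the input
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}
```

For example: jwt_payload "$(kubectl get secret admin-user -n kubernetes-dashboard -o jsonpath='{.data.token}' | base64 -d)".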