You can push streaming CSV logs or data into Elasticsearch with Fluentd using a very simple configuration.

Consider the following semicolon-delimited CSV data listing car make, model, year and price:

    Honda;City;2020;100000
    Suzuki;Baleno;2018;100000
    ;Glanza;2006;50000
    Ford;;2001;25000

Now, to ship this data into Elasticsearch, add the following configuration to the Fluentd td-agent.conf file:

<source>

@type tail

path /opt/sample.csv

pos_file /var/log/td-agent/sample.csv.pos

tag data.person # Tag value

format csv

delimiter ";"

keys Make, Model, Year, Price # Fields

</source>

<match data.*> # match tag

@type copy

<store>

@type elasticsearch

include_tag_key true

host localhost

port 9200

logstash_format true

logstash_prefix csvdata # Elasticsearch Index pattern

</store>

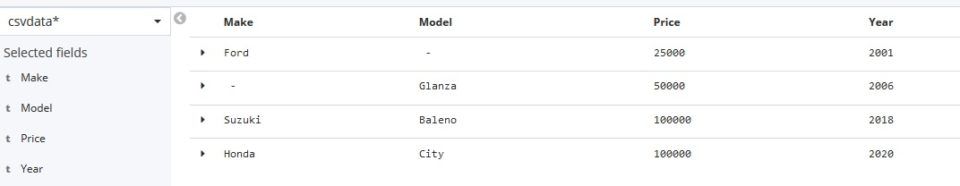

</match>Restart fluentd daemon and you will notice data in file starts going into elasticsearch. To visualize data in kibana from elasticsearch, you have to manage csvdata* elasticsearch index into Kibana Index patterns. Here is how data looks like in Kibana.
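Because the configuration is declarative, it can help to see roughly what it does to each line. The following is a minimal Python sketch, not Fluentd code: parse_rows and index_name are hypothetical helpers that approximate how the csv parser (with delimiter ";" and the listed keys) turns each row into a record, and how logstash_format with logstash_prefix csvdata names the daily index (prefix, a dash, then the date as YYYY.MM.DD), which is why a csvdata* index pattern matches them. In this sketch empty fields become empty strings and every value stays a string; Fluentd's exact handling of empty fields and value types may differ, so check the parser documentation if you need numeric Year and Price in Elasticsearch.

```python
import csv
import io
from datetime import datetime, timezone

# Field names as listed in the `keys` parameter of the source block.
KEYS = ["Make", "Model", "Year", "Price"]


def parse_rows(text, delimiter=";"):
    """Hypothetical helper: one record per non-blank line, keys zipped with fields."""
    records = []
    for fields in csv.reader(io.StringIO(text), delimiter=delimiter):
        if not fields:  # csv.reader yields [] for blank lines; skip them
            continue
        records.append(dict(zip(KEYS, fields)))
    return records


def index_name(prefix, when):
    """Hypothetical helper: daily index name in the prefix-YYYY.MM.DD style."""
    return f"{prefix}-{when.strftime('%Y.%m.%d')}"


sample = """Honda;City;2020;100000
Suzuki;Baleno;2018;100000
;Glanza;2006;50000
Ford;;2001;25000
"""

for record in parse_rows(sample):
    print(record)

# The date here is an arbitrary example, not taken from the article.
print(index_name("csvdata", datetime(2020, 5, 1, tzinfo=timezone.utc)))
```

Running it prints one dictionary per row; the Glanza row gets an empty Make and the Ford row an empty Model, mirroring the gaps in the sample data.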